I. The New Imperatives of EdTech

The EdTech sector has evolved rapidly over the last few years. The shift toward remote learning, hybrid classrooms, and mobile-first engagement has dramatically changed how learners interact with content. Students no longer want one-size-fits-all content; they expect a tailored, adaptive learning journey that matches their pace, style, and knowledge gaps.

For educational institutions and content providers, this creates both opportunity and pressure. AI-powered analytics, personalized learning paths, and real-time performance feedback are becoming table stakes. But enabling these capabilities requires more than feature additions. It demands a platform that is architected from the ground up for data observability, real-time processing, and machine learning integration.

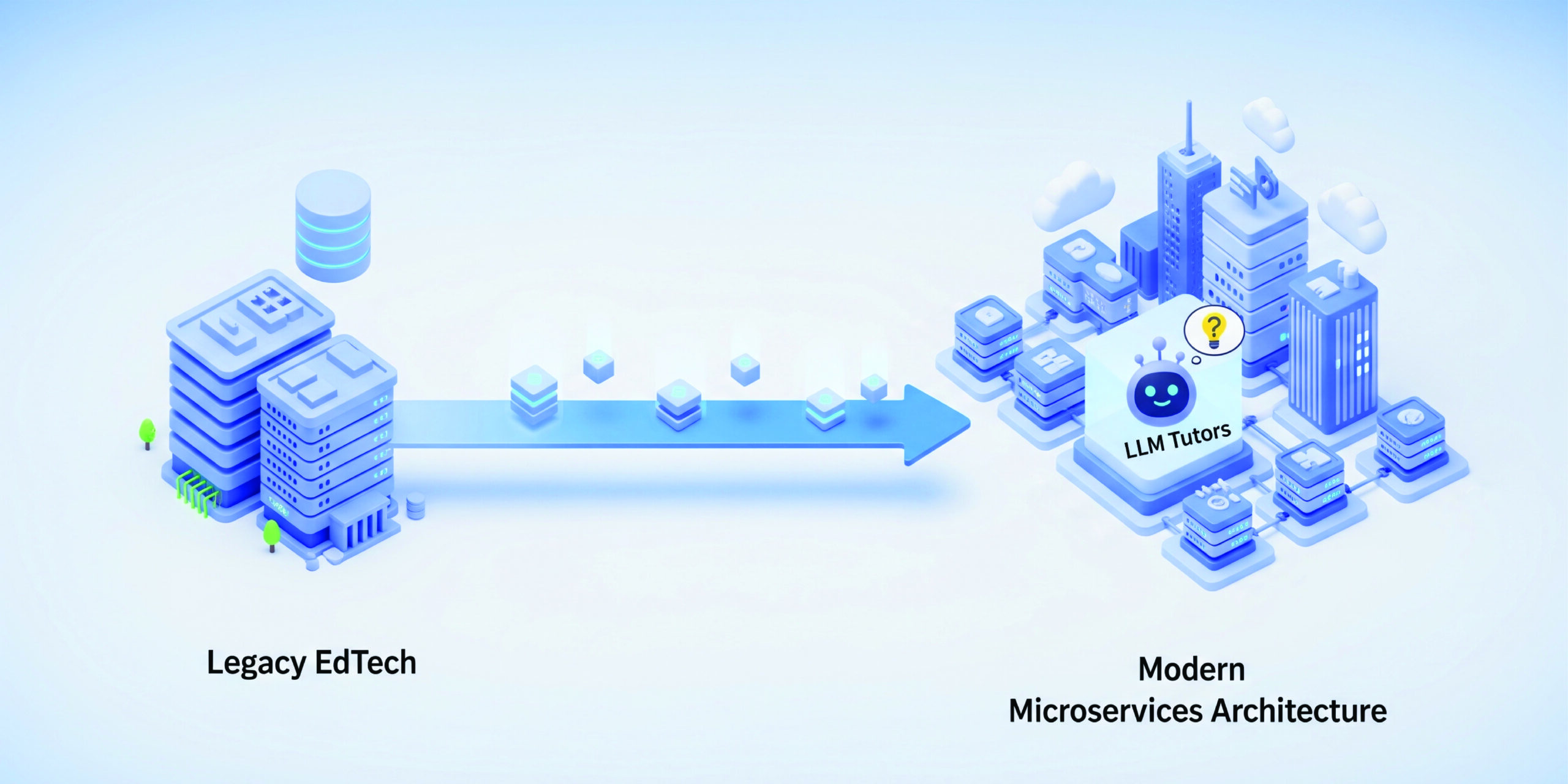

Most legacy LMS and learning platforms, originally built to deliver static content, are ill-equipped to support these needs. To remain competitive and learner-centric, enterprises must consider re-architecting their platforms for cloud scalability and AI readiness.

II. The Problem with the Legacy Platform

The platform in question was a widely adopted LMS that had served the organization well for over a decade. But its monolithic design had become a bottleneck.

- Inflexible Codebase: Even minor enhancements required coordination across multiple teams and full-stack redeployments, slowing the velocity of new feature rollouts.

- Poor Real-Time Capabilities: All data pipelines were batch-oriented, making it impossible to support real-time dashboards, in-session personalization, or time-sensitive interventions.

- Lack of Observability: Data generated during student interactions (clicks, scrolls, quiz answers) was not logged in a structured, usable manner.

- AI Isolation: Any effort to introduce ML models had to go through awkward integrations or side-car services, creating latency and reliability challenges.

- Limited Scalability: As concurrent user volume grew during peak learning hours, system response times deteriorated, causing friction for learners and instructors alike.

It was clear the legacy LMS couldn’t scale into the future.

III. Re-Architecting for AI Readiness: The Game Plan

The objective was not just to modernize, but to make the platform fundamentally AI-native. This required transforming both the infrastructure and data architecture.

Key Decisions & Execution Strategy:

- Service-Oriented Decomposition: We broke down the LMS into modular, independently deployable services. Core services included: User Profile, Content Delivery, Quiz Engine, Session Tracking, and Analytics.

- Stateless & Scalable: Most services were designed to be stateless and deployed in containers using Kubernetes or Azure Kubernetes Service (AKS), enabling on-demand horizontal scaling.

- Event-Driven Architecture: Every user action—starting a video, answering a question, navigating a lesson—was published to an event bus, laying the foundation for real-time processing.

- Decoupled Learning Modules: Learning flows (courses, quizzes, assessments) were abstracted as reusable components to support dynamic curriculum generation.

- Multi-Tier Data Capture:

  - Frontend event tracking (mouse movements, page scrolls, time-on-task)

  - Quiz and assignment results with metadata

  - Media consumption metrics (watch duration, skip rates, pause points)

This re-architecture allowed data and engagement events to flow in near real-time to the backend, forming the backbone for downstream ML processing.
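
As a rough illustration of the event-driven pattern described above, the sketch below defines an event envelope and a tiny in-memory bus. Everything here is an assumption made for illustration: the `LearnerEvent` fields, the event type names, and the `InMemoryEventBus` class are hypothetical, and in production a managed streaming service would take the place of the in-memory bus.

```python
import json
import time
import uuid
from collections import defaultdict
from dataclasses import asdict, dataclass, field
from typing import Callable, DefaultDict, Dict, List


@dataclass(frozen=True)
class LearnerEvent:
    """Hypothetical envelope for a single learner action."""
    learner_id: str
    session_id: str
    event_type: str  # e.g. "video_started", "quiz_answered" (assumed names)
    payload: Dict[str, object] = field(default_factory=dict)
    event_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    occurred_at: float = field(default_factory=time.time)

    def to_json(self) -> str:
        """Serialize the event for transport on a stream."""
        return json.dumps(asdict(self), sort_keys=True)


Handler = Callable[[LearnerEvent], None]


class InMemoryEventBus:
    """Stand-in for a managed event stream; delivers synchronously."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        """Register a handler for one event type."""
        self._subscribers[event_type].append(handler)

    def publish(self, event: LearnerEvent) -> int:
        """Deliver the event to matching handlers; return how many ran."""
        handlers = self._subscribers.get(event.event_type, [])
        for handler in handlers:
            handler(event)
        return len(handlers)
```

A service such as Session Tracking would call `publish` for each learner action, while consumers such as Analytics would register handlers with `subscribe`, so producers never need to know who is listening.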

IV. Cloud Stack & AI Workflow Integration

The new architecture was deployed entirely on cloud-native infrastructure, with deliberate choices made to support scale, observability, and ML-readiness.

Data Platform Components:

- Ingestion Layer:

  - Amazon Kinesis or Azure Event Hubs for streaming data ingestion, capturing thousands of learner events per second.

- Storage Foundation:

  - Amazon S3 or Azure Data Lake Storage Gen2 as the central storage for raw and processed data across all sessions, users, and content types.

- Data Processing Pipelines:

  - AWS Glue and Azure Data Factory were used for both batch and streaming ETL processes.

  - Data was cleansed, aggregated, and stored in a structured columnar format (Parquet) for downstream AI training and BI reporting.
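
The cleanse-and-aggregate step can be sketched in plain Python as follows. This is a minimal illustration, not the pipeline that was actually deployed: the field names (`event_id`, `correct`, `watch_seconds`), the event type names, and both function names are assumptions, and writing the resulting rows to Parquet is deliberately left out.

```python
from typing import Dict, Iterable, List, Tuple

# Assumed minimum set of fields a usable event record must carry.
REQUIRED_FIELDS = ("learner_id", "session_id", "event_type")


def cleanse(events: Iterable[Dict[str, object]]) -> List[Dict[str, object]]:
    """Drop records with missing required fields or a repeated event_id."""
    seen_ids = set()
    clean = []
    for event in events:
        if any(not event.get(name) for name in REQUIRED_FIELDS):
            continue  # incomplete record
        event_id = event.get("event_id")
        if event_id is not None:
            if event_id in seen_ids:
                continue  # duplicate delivery
            seen_ids.add(event_id)
        clean.append(event)
    return clean


def aggregate_by_session(events: Iterable[Dict[str, object]]) -> List[Dict[str, object]]:
    """Roll cleansed events up into one flat summary row per session."""
    rows: Dict[Tuple[object, object], Dict[str, object]] = {}
    for event in events:
        key = (event["learner_id"], event["session_id"])
        row = rows.setdefault(key, {
            "learner_id": event["learner_id"],
            "session_id": event["session_id"],
            "event_count": 0,
            "quiz_attempts": 0,
            "quiz_correct": 0,
            "watch_seconds": 0.0,
        })
        row["event_count"] += 1
        if event["event_type"] == "quiz_answered":
            row["quiz_attempts"] += 1
            row["quiz_correct"] += 1 if event.get("correct") else 0
        elif event["event_type"] == "video_watched":
            row["watch_seconds"] += float(event.get("watch_seconds", 0.0))
    return list(rows.values())
```

Because each output row is flat and uniformly typed, it maps directly onto a columnar file format for training and reporting.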

AI/ML Enablement Stack:

- Feature Store:

  - Student profiles were enriched with behavioral signals: engagement heatmaps, quiz accuracy trends, response times, and drop-off patterns.

- Model Training & Deployment:

  - We used Amazon SageMaker and Azure Machine Learning Studio to build, train, and deploy models:

    - Drop-off Prediction Models: Scored learners in real time for disengagement risk.

    - Adaptive Content Recommendation Engines: Suggested next best content based on performance and interest vectors.

- Model Serving:

  - RESTful inference APIs powered the content engines and dashboards and were integrated with the frontend via GraphQL and REST SDKs.

The pipeline operated on a continuous loop—ingesting, learning, adapting—and became a powerful engine for personalized education.
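
To make the feature-store and drop-off scoring ideas more concrete, here is a small sketch that derives a few behavioral features and feeds them to a logistic scoring function. The feature definitions, the `build_features` and `drop_off_risk` names, and especially the weights are hypothetical placeholders chosen for illustration; a real model would learn its parameters from historical data.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class LearnerFeatures:
    quiz_accuracy: float          # fraction of answers correct, 0..1
    accuracy_trend: float         # later-half accuracy minus earlier-half accuracy
    mean_response_seconds: float  # average time taken to answer
    drop_off_rate: float          # fraction of started lessons not completed


def _mean(values: Sequence[float]) -> float:
    return sum(values) / len(values) if values else 0.0


def build_features(
    quiz_results: Sequence[bool],
    response_seconds: Sequence[float],
    lessons_started: int,
    lessons_completed: int,
) -> LearnerFeatures:
    """Derive simple behavioral features from raw per-learner signals."""
    scores = [1.0 if correct else 0.0 for correct in quiz_results]
    half = len(scores) // 2
    # Trend is only meaningful when there is an earlier half to compare with.
    trend = _mean(scores[half:]) - _mean(scores[:half]) if half else 0.0
    drop_off = 1.0 - lessons_completed / lessons_started if lessons_started else 0.0
    return LearnerFeatures(
        quiz_accuracy=_mean(scores),
        accuracy_trend=trend,
        mean_response_seconds=_mean(response_seconds),
        drop_off_rate=drop_off,
    )


# Hypothetical hand-set parameters, NOT learned from any data.
BIAS = -1.0
W_ACCURACY = -2.0
W_TREND = -1.5
W_RESPONSE = 0.05
W_DROP_OFF = 3.0


def drop_off_risk(features: LearnerFeatures) -> float:
    """Return a disengagement risk in (0, 1) using a logistic function."""
    z = (
        BIAS
        + W_ACCURACY * features.quiz_accuracy
        + W_TREND * features.accuracy_trend
        + W_RESPONSE * features.mean_response_seconds
        + W_DROP_OFF * features.drop_off_rate
    )
    return 1.0 / (1.0 + math.exp(-z))
```

With these placeholder weights, falling accuracy and abandoned lessons push the score up, while steady or improving accuracy pulls it down.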

V. Real-Time Feedback Loop in Action

To bring these AI capabilities to life, we created a real-time feedback system that operated during a live learner session.

The Flow:

- Learner watches a video and attempts an embedded quiz.

- Frontend SDKs stream event data to Kinesis/Event Hubs in under 50 ms.

- A real-time processing job computes the learner’s attention score, quiz accuracy, and hesitation time.

- These values are sent to the feature store and passed through the trained ML model.

- Model output determines whether the learner:

  - Proceeds to the next level

  - Receives a simpler explanation video

  - Gets a chatbot prompt for help

- Results are sent back via the inference API, and the UI adjusts dynamically without page reloads.

- Simultaneously, dashboards for instructors update to show risk alerts and learning trajectories.

AWS Lambda and Azure Functions played a key role in triggering low-latency personalization responses—handling model scoring, API calls, and data syncs within milliseconds.

This loop ensured every learner interaction improved the platform’s understanding of that individual.
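
The decision step in this flow, where model output is mapped to one of the three learner experiences, could look roughly like the sketch below. The `choose_intervention` function, its inputs, and all threshold values are assumptions made for illustration rather than the rules the platform actually used.

```python
from enum import Enum


class Intervention(Enum):
    PROCEED = "proceed_to_next_level"
    SIMPLER_VIDEO = "show_simpler_explanation"
    CHATBOT_PROMPT = "offer_chatbot_help"


def choose_intervention(
    risk_score: float,
    quiz_accuracy: float,
    hesitation_seconds: float,
    *,
    risk_threshold: float = 0.6,         # hypothetical cut-off
    accuracy_threshold: float = 0.7,     # hypothetical cut-off
    hesitation_threshold: float = 20.0,  # hypothetical cut-off, in seconds
) -> Intervention:
    """Map model output and session signals to a learner-facing action."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    # Low risk and solid accuracy: let the learner move on.
    if risk_score < risk_threshold and quiz_accuracy >= accuracy_threshold:
        return Intervention.PROCEED
    # Long hesitation suggests the learner is stuck, so offer live help.
    if hesitation_seconds >= hesitation_threshold:
        return Intervention.CHATBOT_PROMPT
    # Otherwise, re-explain the concept at a simpler level.
    return Intervention.SIMPLER_VIDEO
```

Keeping this policy as a small pure function makes it straightforward to run inside a short-lived serverless handler and to unit test separately from the model itself.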

VI. Outcomes & Measurable Impact

The transformation was not just architectural—it delivered tangible business and user outcomes.

- 60% Faster Release Cycles: Service independence enabled parallel development, quicker testing, and zero-downtime deployments.

- 3x Improvement in Real-Time Insights: Dashboards updated instantly with learning KPIs, helping instructors adapt content mid-course.

- 18% Boost in Course Completion: Thanks to AI-powered recommendations, learners engaged longer and finished more modules.

- <250 ms Latency: The platform sustained response times under 250 ms with over 10,000 concurrent learners during peak periods.

- Better ML Model Accuracy: With richer, real-time data pipelines, models continuously improved via reinforcement signals.

These improvements weren’t theoretical—they directly contributed to higher learner satisfaction, reduced churn, and new revenue models based on premium AI-enabled features.

VII. Security, Compliance & Education-Grade Governance

Handling sensitive learner data—especially for K–12 and international deployments—demanded a strong security foundation.

Key Security Measures:

- User Identity Management:

  - Integrated Amazon Cognito and Azure AD B2C for federated authentication, token refresh, and MFA support.

- Role-Based Access Control (RBAC):

  - Fine-grained permissions were defined for admins, instructors, students, and content editors.

- Data Governance & Compliance:

  - GDPR and FERPA compliance was built in, with consent tracking, audit trails, and right-to-be-forgotten (erasure) APIs.

  - Encryption at rest and in transit, with key management automated through AWS KMS / Azure Key Vault.

All security controls were embedded into DevSecOps workflows, with pre-deployment security scanning and automated policy checks.
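
A minimal sketch of the role-based access check described above is shown here. The four roles come from the list of measures; the permission strings and their assignment to roles are hypothetical examples, not the platform's actual policy.

```python
from enum import Enum
from typing import Dict, FrozenSet


class Role(Enum):
    ADMIN = "admin"
    INSTRUCTOR = "instructor"
    STUDENT = "student"
    CONTENT_EDITOR = "content_editor"


# Hypothetical permission map; a real deployment would load this from policy.
ROLE_PERMISSIONS: Dict[Role, FrozenSet[str]] = {
    Role.ADMIN: frozenset({"users:manage", "content:edit", "grades:view_all", "content:view"}),
    Role.INSTRUCTOR: frozenset({"grades:view_all", "content:view"}),
    Role.CONTENT_EDITOR: frozenset({"content:edit", "content:view"}),
    Role.STUDENT: frozenset({"content:view", "grades:view_own"}),
}


def is_allowed(role: Role, permission: str) -> bool:
    """Return True only if the role was explicitly granted the permission."""
    return permission in ROLE_PERMISSIONS.get(role, frozenset())
```

The check is deny-by-default: a role or permission missing from the map yields `False`, which is generally the safer failure mode when handling learner data.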

VIII. What’s Next: From AI to LLMs

Now that the platform has a strong cloud-native, data-driven foundation, it is ready to leverage Generative AI and LLMs.

Upcoming Initiatives:

- LLM-Powered Tutor Chatbot:

  - Capable of understanding course context and learner history to answer questions with precise, level-appropriate responses.

- Auto-Generated Summaries:

  - NLP models to generate personalized lesson summaries and highlight performance insights for teachers and parents.

- Multilingual Support:

  - Using LLMs to translate content and interactions in real time for global learners.

Crucially, the event-stream and ML-inference layers already in place make LLM integration lightweight: no redesign is required, only model updates and API extensions.
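
As one possible illustration of how existing learner data could feed a tutor chatbot, the sketch below assembles a prompt from course context and recent quiz history. The `build_tutor_prompt` function, its parameters, and the prompt wording are all assumptions; the call to an actual LLM is intentionally omitted.

```python
from typing import Sequence


def build_tutor_prompt(
    question: str,
    course_title: str,
    lesson_summary: str,
    recent_quiz_results: Sequence[bool],
    level: str,
) -> str:
    """Combine course context and learner history into a single prompt."""
    attempts = len(recent_quiz_results)
    correct = sum(1 for result in recent_quiz_results if result)
    if attempts:
        history = f"{correct} of {attempts} recent quiz answers correct"
    else:
        history = "no recent quiz attempts"
    return "\n".join([
        f"You are a tutor for the course '{course_title}'.",
        f"Current lesson: {lesson_summary}",
        f"Learner level: {level}",
        f"Learner history: {history}",
        "Answer the question below at a level appropriate for this learner.",
        f"Question: {question}",
    ])
```

The inputs map onto data the platform already collects, such as session context and quiz outcomes, so the only new component would be the model call itself.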

IX. Key Takeaways for EdTech Leaders

- Cloud modernization isn’t just a technology upgrade—it’s the foundation for personalized, intelligent learning.

- AI features like adaptive content and dropout prediction demand real-time data architectures and event-driven design.

- You can’t simply “bolt on” ML to a legacy platform—re-architecting is essential.

- A modular, cloud-native platform creates flexibility, agility, and AI-readiness.

- Partnering with an experienced engineering team accelerates the journey, while reducing transformation risks.