I. Introduction: From Legacy to Learning AI

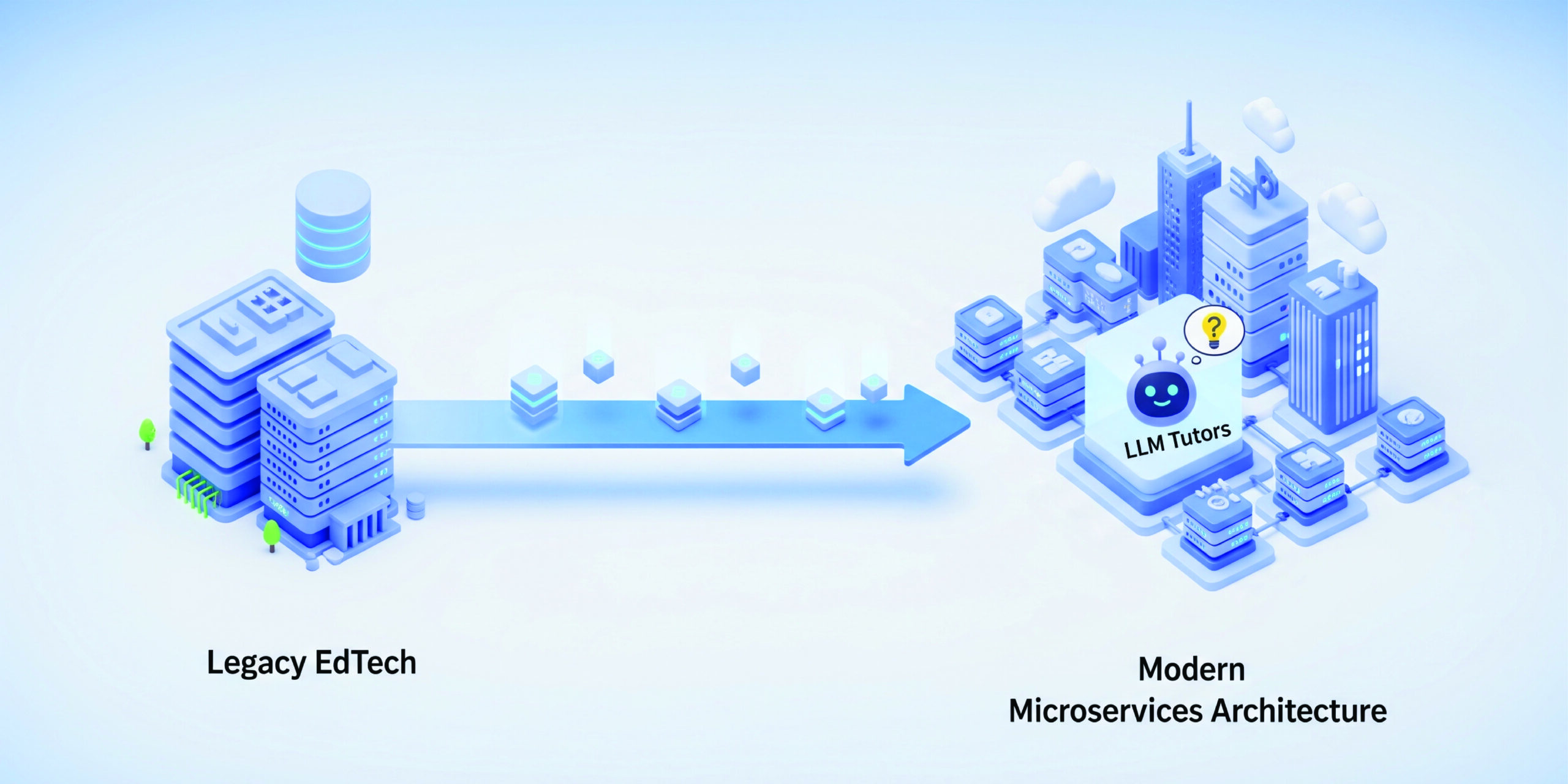

Legacy EdTech platforms, once fit for delivering standardized content, are now a roadblock to innovation. As education becomes more personalized and data-driven, platforms must support intelligent assistants, adaptive feedback, and multimodal learning paths — all powered by GenAI.

However, GenAI cannot simply be layered on top of outdated infrastructure. AI tutors, real-time personalization, and usage-aware content delivery require cloud-native, modular, and observable platforms. This post walks through how we helped an EdTech company migrate its legacy learning system to a scalable microservices stack and then embed LLM-powered tutors to enable intelligent learning experiences.

To enable AI features like tutoring agents or adaptive content, we needed a platform that supports container orchestration, real-time telemetry, and secure API communication. Without those, model interactions, session persistence, and usage analytics would remain impossible to scale or govern.

II. The Starting Point: A Monolithic LMS Platform

The platform we inherited was built using Java Spring, PostgreSQL, and server-rendered HTML templates — all bundled into a tightly coupled monolith. Every feature — from content delivery to assessments and user profiles — was deployed together.

Pain Points We Identified:

- Scaling limitations: A performance issue in grading slowed down the entire platform, because every feature shared one deployment and could only be scaled as a whole.

- Coupled feature logic: Small updates required full regression cycles.

- No API layer: No access points for third-party tools, bots, or real-time interaction.

- Zero AI-readiness: No telemetry, usage events, or external model invocation capability.

The LMS had no separation of concerns — the frontend was tightly bound to backend logic, making it impossible to adopt modern frontend frameworks like React or to expose stateless APIs via REST or gRPC. Moreover, the PostgreSQL schema followed an over-normalized pattern with limited support for analytics-optimized queries.

To enable any meaningful GenAI integration, the underlying system had to be reimagined.

III. Migration Strategy to Cloud-Native Microservices

We started by decomposing the monolith into discrete, domain-aligned services, each deployed and scaled independently.

Key Microservices:

- Content Delivery Engine – to serve video, readings, and courseware

- Assessment Module – for quizzes, evaluations, and result computation

- Engagement Tracker – logging interaction signals and patterns

- User Access Manager – handling identities, roles, and permissions

Modernization Stack:

- Azure Kubernetes Service (AKS) for container orchestration

- Azure SQL + Blob Storage for structured and media content

- CI/CD pipelines using GitHub Actions and Azure DevOps

- Observability built with Application Insights and OpenTelemetry

Each microservice was containerized using lightweight base images and deployed as isolated pods on AKS. We used Helm charts to manage deployments and configured the horizontal pod autoscaler (HPA) to scale on CPU and memory utilization. CI/CD pipelines templated configuration per environment, pulling secrets from Azure Key Vault and injecting the remaining settings as environment variables.
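Kubernetes documents the HPA's core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / targetMetricValue), evaluated per metric with the largest proposal winning. The sketch below reproduces only that arithmetic for CPU and memory targets, assuming the default 10% tolerance; it ignores stabilization windows, pod readiness, and missing metrics, and the numbers in the usage line are illustrative, not the targets we configured.

```python
import math

# Kubernetes' default HPA tolerance: metric ratios within 10% of 1.0 cause no scaling.
TOLERANCE = 0.1


def proposal_for_metric(current_replicas: int, current_util: float, target_util: float) -> int:
    """Replica count suggested by one utilization metric (e.g. average CPU %)."""
    ratio = current_util / target_util
    if abs(ratio - 1.0) <= TOLERANCE:
        return current_replicas  # close enough to target: keep the current count
    return math.ceil(current_replicas * ratio)


def desired_replicas(current_replicas, metrics, min_replicas, max_replicas):
    """metrics: (current_utilization, target_utilization) pairs, e.g. CPU and memory.

    Each metric yields a proposal; the largest wins, clamped to [min, max].
    Stabilization windows, pod readiness, and missing metrics are ignored here.
    """
    proposals = [proposal_for_metric(current_replicas, cur, tgt) for cur, tgt in metrics]
    return max(min_replicas, min(max_replicas, max(proposals)))


# Illustrative values only: 4 pods, CPU at 90% vs a 60% target, memory at 50% vs 70%.
print(desired_replicas(4, [(90, 60), (50, 70)], min_replicas=2, max_replicas=10))  # 6
```

Taking the maximum across metrics means a memory-heavy service still scales out even when CPU looks idle, which is why scaling on both signals is safer than CPU alone.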

We instrumented all API calls with OpenTelemetry tracing, capturing per-request latency, errors, and end-to-end user-journey traces. From this telemetry we derived latency percentiles and error rates, visualized in Azure Monitor Workbooks to identify bottlenecks and track long-term performance.
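Azure Monitor computes these aggregates from exported telemetry, so the sketch below only makes the underlying arithmetic concrete. It assumes a hypothetical, flattened `SpanRecord` (not the OpenTelemetry span model) and uses a nearest-rank percentile; Azure Monitor's own percentile functions may use approximate estimators and return slightly different values.

```python
import math
from dataclasses import dataclass


@dataclass
class SpanRecord:
    # Hypothetical flattened span; the real OpenTelemetry span model is richer.
    route: str
    duration_ms: float
    is_error: bool


def percentile(values, p):
    """Nearest-rank percentile for p in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(spans):
    """Per-route p50/p95/p99 latency and error rate."""
    by_route = {}
    for span in spans:
        by_route.setdefault(span.route, []).append(span)
    report = {}
    for route, group in by_route.items():
        durations = [s.duration_ms for s in group]
        report[route] = {
            "p50": percentile(durations, 50),
            "p95": percentile(durations, 95),
            "p99": percentile(durations, 99),
            "error_rate": sum(s.is_error for s in group) / len(group),
        }
    return report
```

Grouping by route matters: a slow grading endpoint can hide behind a healthy platform-wide average but stands out immediately in its own p95 and p99.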

IV. LLM-Ready Architecture: Why the Migration Was Crucial

The shift to microservices unlocked capabilities that were previously impossible. Each service now exposed lightweight, stateless APIs that could interact with external tools — including GenAI modules.

We introduced a Learning Intelligence Layer, which orchestrated LLM interactions in real time. This layer supported:

- Event-driven feedback loops triggered by quiz attempts or engagement drops

- Contextual prompt generation using lesson data, interaction logs, and performance history

- External inference integration via Azure OpenAI’s managed GPT-4 endpoints
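The event-driven loops above can be pictured as a small router that maps learning events to tutor actions. This is a minimal sketch under stated assumptions: the `LearningEvent` fields, the action names, and the "below 40% of the student's baseline" definition of an engagement drop are all hypothetical, since the post does not specify the actual triggers or thresholds.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LearningEvent:
    # Hypothetical event shape; field names are illustrative.
    student_id: str
    lesson_id: str
    kind: str                                # "quiz_attempt" or "engagement_sample"
    correct: Optional[bool] = None           # set for quiz attempts
    active_seconds: Optional[float] = None   # set for engagement samples


# Hypothetical threshold: activity below 40% of the student's baseline counts as a drop.
DROP_RATIO = 0.4


def route_event(event, baseline_seconds):
    """Return the tutor action a feedback loop would trigger, or None."""
    if event.kind == "quiz_attempt" and event.correct is False:
        return "explain_answer"
    if event.kind == "engagement_sample" and event.active_seconds is not None:
        if baseline_seconds > 0 and event.active_seconds < DROP_RATIO * baseline_seconds:
            return "offer_hint"
    return None
```

Comparing against each student's own baseline, rather than a global number, avoids nudging students who simply work faster than average.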

The prompt orchestration engine fetched student session data from Cosmos DB, rendered it into Jinja2-based prompt templates, and routed the resulting prompt through our internal LLM Gateway for model inference. The gateway handled rate limiting, fallback logic, and per-session telemetry tagging, enabling end-to-end traceability.
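The three gateway responsibilities named above (rate limiting, fallback, telemetry tagging) fit together roughly as follows. This is a hypothetical sketch, not our gateway: it assumes a token-bucket limiter, model backends passed in as plain callables tried in order, and an in-memory list standing in for a real telemetry exporter.

```python
import time
import uuid


class TokenBucket:
    """Token-bucket limiter: refills `rate` tokens per second, up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def try_acquire(self):
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class LLMGateway:
    """Hypothetical gateway: rate limiting, ordered fallback, and per-call telemetry tags."""

    def __init__(self, backends, limiter):
        self.backends = backends  # ordered list of (model_name, callable(prompt) -> str)
        self.limiter = limiter
        self.telemetry = []       # stand-in for a real telemetry exporter

    def _tag(self, call_id, session_id, outcome, model=None):
        self.telemetry.append(
            {"call_id": call_id, "session_id": session_id, "model": model, "outcome": outcome}
        )

    def complete(self, session_id, prompt):
        call_id = str(uuid.uuid4())  # one ID shared by every attempt for this request
        if not self.limiter.try_acquire():
            self._tag(call_id, session_id, "rate_limited")
            raise RuntimeError("rate limit exceeded")
        for model, backend in self.backends:
            try:
                text = backend(prompt)
            except Exception:
                self._tag(call_id, session_id, "error", model)
                continue  # fall back to the next model in order
            self._tag(call_id, session_id, "ok", model)
            return text
        raise RuntimeError("all backends failed")
```

Sharing one `call_id` across the failed primary attempt and the successful fallback is what lets a single request be traced end to end, even when it touched more than one model.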

V. LLM Tutor Integration — How It Works

With the orchestration and API layers in place, we launched the LLM Tutor — an embedded, context-aware agent designed to support students as they learn.

Core Capabilities:

- Answer Explanation: After a wrong quiz answer, the tutor offers conceptual clarification.

- On-Demand Help: Students can highlight confusing text or concepts and ask for simplification.

- Hint Generation: Rather than revealing answers, the tutor scaffolds learning with contextual hints.

- Mini Recaps: Summarizes a lesson’s core ideas after completion, reinforcing retention.

Implementation Highlights:

- The Tutor API dynamically assembles the context (lesson ID, user history, quiz attempts) to build a tailored prompt.

- Each prompt is routed through our LLM Gateway to Azure OpenAI, and every call is tagged for observability and performance metrics.

- Redis caching is applied to recent prompt-response pairs with a 15-minute TTL to balance performance and freshness.
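Context assembly and the 15-minute cache can be sketched together. Two substitutions are deliberate and named plainly here: Python's standard `string.Template` stands in for the Jinja2 templates, and an in-memory dictionary stands in for Redis, mimicking `SET key value EX 900`. The template wording, field names, and `tutor:` key prefix are hypothetical.

```python
import hashlib
import time
from string import Template

# stdlib stand-in for the Jinja2 templates; wording and fields are hypothetical.
HINT_TEMPLATE = Template(
    "You are a tutor for lesson $lesson_id.\n"
    "The student has attempted this quiz $attempts time(s).\n"
    "Recent activity: $history\n"
    "Give one hint without revealing the answer to: $question"
)

CACHE_TTL_SECONDS = 15 * 60  # the 15-minute TTL described above


def build_prompt(lesson_id, history, attempts, question):
    return HINT_TEMPLATE.substitute(
        lesson_id=lesson_id,
        history="; ".join(history[-3:]) or "none",  # keep only the most recent events
        attempts=attempts,
        question=question,
    )


class TTLCache:
    """In-memory stand-in for Redis `SET key value EX 900`."""

    def __init__(self, ttl, clock=time.monotonic):
        self.ttl = ttl
        self.clock = clock
        self.store = {}

    @staticmethod
    def key(prompt):
        return "tutor:" + hashlib.sha256(prompt.encode()).hexdigest()

    def get(self, prompt):
        entry = self.store.get(self.key(prompt))
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self.store[self.key(prompt)]  # expired, like a Redis key past its TTL
            return None
        return value

    def set(self, prompt, response):
        self.store[self.key(prompt)] = (response, self.clock() + self.ttl)
```

Keying on a hash of the fully rendered prompt means the cache only hits when the student's context is unchanged; a new quiz attempt changes the prompt and forces a fresh response, which is one way to trade performance against freshness.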

The Tutor API also enforces token-based authentication and role-based access control through Azure Active Directory, so only authorized roles can invoke LLM functions.
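Role scoping can be reduced to a deny-by-default check on the `roles` claim that Azure AD places in access tokens for app roles. This sketch assumes the token has already been validated upstream (signature, issuer, audience, expiry) and that claims arrive as a dictionary; the role names and tutor function names are hypothetical.

```python
# Hypothetical mapping from tutor functions to the app roles allowed to call them.
REQUIRED_ROLES = {
    "tutor.ask": {"Student", "Teacher"},
    "tutor.review_logs": {"Teacher", "Admin"},
    "tutor.edit_examples": {"Teacher"},
}


class Forbidden(Exception):
    pass


def authorize(claims, function_name):
    """Check role-scoped access using the `roles` claim of an already-validated token.

    Assumes signature, issuer, audience, and expiry were verified upstream.
    """
    allowed = REQUIRED_ROLES.get(function_name)
    if allowed is None:
        raise Forbidden(f"unknown function: {function_name}")  # deny by default
    granted = set(claims.get("roles", []))
    if not granted & allowed:
        raise Forbidden(f"{function_name} requires one of {sorted(allowed)}")
    return True
```

Unknown functions are rejected rather than allowed, so adding a new tutor capability forces an explicit decision about who may call it.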

Guardrails & Escalation:

- All prompt inputs are filtered using the Detoxify library for offensive or unsafe language.

- Responses are screened through regex-based content auditors for compliance, tone, and instructional quality.

- When the model detects high uncertainty, a soft escalation flow is triggered via Azure Functions, guiding students to a curated help resource or a teacher follow-up.
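The response-side checks can be sketched as a list of named regex rules plus a confidence cutoff that decides escalation. Everything specific here is an assumption: the patterns are illustrative rather than our production rules, and because the post does not say how uncertainty is measured, the sketch simply takes a confidence score in [0, 1] as input. The Detoxify input filter is not reproduced.

```python
import re
from dataclasses import dataclass, field

# Hypothetical auditor rules: (name, compiled pattern). A match fails the check.
AUDIT_RULES = [
    ("reveals_answer", re.compile(r"\b(the (correct )?answer is|answer:)", re.IGNORECASE)),
    ("dismissive_tone", re.compile(r"\b(obviously|just memorize|that's easy)\b", re.IGNORECASE)),
    ("contact_info", re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")),
]

# Hypothetical cutoff: responses below this confidence trigger the soft escalation flow.
ESCALATION_THRESHOLD = 0.5


@dataclass
class AuditResult:
    passed: bool
    violations: list = field(default_factory=list)
    escalate: bool = False


def audit_response(text, confidence):
    """Run every regex rule over a model response and decide on escalation."""
    violations = [name for name, pattern in AUDIT_RULES if pattern.search(text)]
    return AuditResult(
        passed=not violations,
        violations=violations,
        escalate=confidence < ESCALATION_THRESHOLD,
    )
```

Keeping rules as named entries makes each failure reportable by name, which is the kind of signal an educator dashboard can surface as a "flagged answer".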

Educator Controls:

Teachers receive dashboards summarizing tutor interactions — showing most-asked questions, flagged answers, and trends in student confusion. They can:

- Flag or correct inaccurate model outputs

- Add curated examples or FAQ prompts

- View anonymized logs for feedback tuning

This ensures the tutor evolves with the instructional ecosystem and supports educator oversight.
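The dashboard summaries described above come down to a few aggregations over interaction logs. This is a minimal sketch assuming a hypothetical, already-anonymized `TutorInteraction` record; it approximates "trends in student confusion" as escalation counts per lesson, which is a simplification.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class TutorInteraction:
    # Hypothetical anonymized log record.
    lesson_id: str
    question: str
    flagged: bool
    escalated: bool


def normalize(question):
    """Collapse case and whitespace so near-identical questions group together."""
    return " ".join(question.lower().split())


def dashboard_summary(interactions, top_n=3):
    asked = Counter(normalize(i.question) for i in interactions)
    confusion = Counter(i.lesson_id for i in interactions if i.escalated)
    return {
        "most_asked": asked.most_common(top_n),
        "flagged": [i for i in interactions if i.flagged],
        "confusion_by_lesson": confusion.most_common(),
    }
```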

VI. Measurable Impact

The LLM Tutor wasn’t a speculative feature — it created measurable improvements in both engagement and operational efficiency.

Quantified Outcomes:

- 23% increase in student engagement time (measured via session duration and scroll depth)

- 64% of student questions resolved by the LLM Tutor without requiring instructor follow-up

- 18% improvement in feedback scores from pilot cohorts using the AI-enabled system

- Platform stability and uptime improved significantly, with microservice failures no longer cascading across the system

Engagement metrics were tracked using the Application Insights SDK embedded in the frontend. We correlated tutor interactions with engagement spikes using Kusto queries over session telemetry. Escalation versus resolution was tracked through tagged interaction outcomes logged by the LLM Gateway.
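The arithmetic behind figures like these is simple but easy to get subtly wrong, so here is a sketch of it in Python (the production queries were written in Kusto). The outcome tag names are hypothetical, and the post does not say how abandoned or untagged sessions were counted; this sketch assumes they are excluded from the resolution-rate denominator.

```python
from collections import Counter

# Hypothetical outcome tags logged by the gateway per tutor interaction.
RESOLVED = "resolved"
ESCALATED = "escalated"


def resolution_rate(outcome_tags):
    """Share of interactions resolved without instructor follow-up.

    Assumption: interactions tagged with anything else (e.g. "abandoned")
    are excluded from the denominator.
    """
    counts = Counter(outcome_tags)
    decided = counts[RESOLVED] + counts[ESCALATED]
    return counts[RESOLVED] / decided if decided else 0.0


def relative_change(before, after):
    """Relative change, e.g. mean session minutes before vs. after a rollout."""
    return (after - before) / before
```

Whether abandoned sessions sit in the denominator can move a resolution rate by several points, so that choice is worth stating alongside any reported figure.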

VII. Governance & Safety in GenAI Usage

We treated AI governance not as an afterthought but as a first-class concern.

Controls & Policies Implemented:

- Prompt inputs passed through Detoxify toxicity classifiers and regex-based content sanitizers.

- All interactions were logged, versioned, and audit-ready for educator or administrator review.

- The tutor was constrained to subject-specific prompt templates, with no access to open-ended generation.

- All student prompts were anonymized before being sent to Azure OpenAI to support compliance with FERPA and other privacy regulations.
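A pre-send anonymization step might look like the sketch below: student IDs are replaced with keyed HMAC-SHA256 pseudonyms (strictly this is pseudonymization, since the key holder can re-link them), and direct identifiers in free text are redacted. The regex patterns, the roster-name list, and the `student-` prefix are hypothetical and far from exhaustive; real PII detection needs more than a few patterns.

```python
import hashlib
import hmac
import re

EMAIL = re.compile(r"[\w.+-]+@[\w-]+(\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def pseudonymize(student_id, secret_key):
    """Stable pseudonym: the same student maps to the same token for a given key."""
    digest = hmac.new(secret_key, student_id.encode(), hashlib.sha256).hexdigest()
    return "student-" + digest[:12]


def redact(text, known_names=()):
    """Strip direct identifiers from free text before it leaves the platform."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    for name in known_names:  # e.g. names from the course roster (hypothetical source)
        text = re.sub(rf"\b{re.escape(name)}\b", "[NAME]", text, flags=re.IGNORECASE)
    return text


def anonymize_request(student_id, prompt, secret_key, known_names=()):
    return {"user": pseudonymize(student_id, secret_key), "prompt": redact(prompt, known_names)}
```

Using a keyed HMAC rather than a plain hash prevents anyone without the key from re-deriving pseudonyms by hashing a list of known student IDs, while still letting the gateway group a student's sessions.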

These measures allowed the tutor to operate safely across K–12 and higher-ed environments while minimizing reputational and legal risk.

VIII. Ongoing Optimization

We continue to improve the tutor and its underlying platform based on real-world usage and educator feedback.

Current Enhancements:

- Prompt tuning and re-ranking based on logged student interactions

- Model benchmarking to evaluate GPT-4 vs. Claude vs. open-source LLMs for cost and latency trade-offs

- Multilingual support (e.g., Spanish and Hindi) using localized prompt templates and fallback translation logic

- Voice-based tutor pilots using Azure Cognitive Services for speech-to-text input and text-to-speech output
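The multilingual item above pairs localized templates with fallback translation. A minimal sketch of that lookup, assuming a hypothetical in-memory template table and a flag that tells a later step to machine-translate the response when no localized template exists:

```python
# Hypothetical localized templates keyed by (template name, language code).
TEMPLATES = {
    ("hint", "en"): "Give one hint, without the answer, for: {question}",
    ("hint", "es"): "Da una pista, sin revelar la respuesta, para: {question}",
    ("hint", "hi"): "उत्तर बताए बिना, इस प्रश्न के लिए एक संकेत दें: {question}",
}


def resolve_template(name, lang, default_lang="en"):
    """Return (template, needs_translation).

    Prefer a localized template; otherwise fall back to the default-language
    template and flag the response for machine translation afterward.
    """
    base = lang.split("-")[0].lower()  # "es-MX" -> "es"
    if (name, base) in TEMPLATES:
        return TEMPLATES[(name, base)], False
    return TEMPLATES[(name, default_lang)], base != default_lang


def render(name, lang, **fields):
    template, needs_translation = resolve_template(name, lang)
    return template.format(**fields), needs_translation
```

Matching on the base language code lets regional variants such as "es-MX" reuse one Spanish template instead of requiring a template per locale.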

Every improvement is tracked via usage metrics and learning outcome correlations, ensuring we invest in what works.

IX. Takeaways for EdTech Leaders

If you’re running a legacy LMS and considering AI, here’s what we learned:

- Modernization is non-negotiable: You can’t add LLMs to a monolith. APIs, containers, and telemetry are prerequisites.

- LLMs need orchestration, not just endpoints: Prompt quality, context handling, and output review pipelines matter as much as model selection.

- Governance builds trust: Safety filters, educator dashboards, and transparent logging are critical for institutional buy-in.

- Real-world ROI is attainable: With the right architecture, AI improves engagement, reduces support load, and creates stickier learning experiences.